Overview

This article, "10 Key Titration Definitions and Examples for Lab Managers," explains the essential concepts and applications of titration in the laboratory. It stresses accurate titration technique in fields such as pharmaceuticals and environmental testing, where precise measurements are not merely beneficial but critical for quality control, regulatory compliance, and product safety. That emphasis on accuracy matters most to lab managers, who are responsible for maintaining high standards in their operations.

Introduction

Titration serves as a cornerstone of analytical chemistry, offering critical insights into the concentration of various substances across multiple industries. Lab managers must master this technique, as it not only ensures compliance with stringent regulatory standards but also safeguards product quality and efficacy.

With a plethora of titration methods available—from Karl Fischer for moisture analysis to complexometric titrations for metal ion detection—how can managers navigate the complexities of each approach to achieve precise results?

This article delves into essential definitions and examples of titration, equipping lab managers with the knowledge necessary to enhance their analytical practices and uphold high standards in their laboratories.

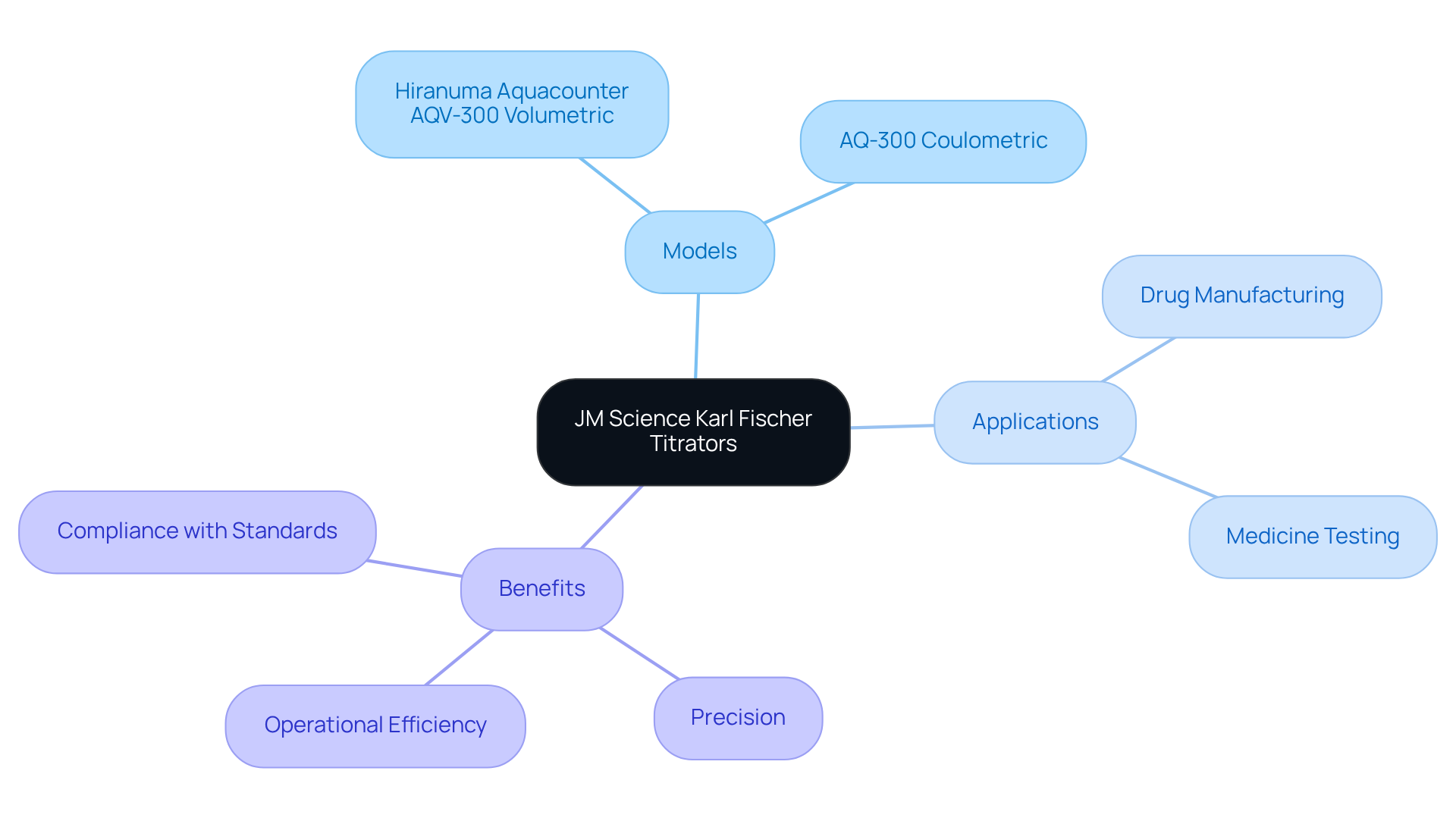

JM Science Karl Fischer Titrators: Precision in Moisture Analysis

Karl Fischer titrators are essential for accurately determining moisture in a wide range of samples, particularly in drug manufacturing. JM Science offers models such as the Hiranuma Aquacounter AQV-300 Volumetric and AQ-300 Coulometric Karl Fischer Titrators, which are designed for drug and medicine testing and adhere to the stringent standards of the Japanese Pharmacopoeia. Because the Karl Fischer reaction is selective for water, these instruments deliver water-content results that are critical for quality control and compliance with industry regulations.

As Francis Froes highlights, precision in measuring moisture content is indispensable for maintaining product integrity. Furthermore, the automated features of these titrators significantly enhance operational efficiency, enabling lab managers to concentrate on other vital tasks while ensuring accurate moisture analysis.
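Both instrument types ultimately reduce to simple arithmetic. The sketch below illustrates the generic textbook relations, not the Aquacounter firmware: a volumetric result is the titrant volume times the reagent's water equivalence (its titer, in mg of water per mL), and a coulometric result follows from Faraday's law, since each water molecule consumes one I₂ and generating one I₂ from iodide takes two electrons (about 10.71 C per mg of water). The function names and sample values are hypothetical.

```python
# Physical constants used by the coulometric calculation
FARADAY_C_PER_MOL = 96485.33   # Faraday constant, coulombs per mole of electrons
WATER_G_PER_MOL = 18.015       # molar mass of water


def kf_volumetric_water_percent(titrant_ml: float, titer_mg_per_ml: float,
                                sample_mg: float) -> float:
    """Water content (% w/w) from a volumetric Karl Fischer titration.

    titer_mg_per_ml is the mg of water consumed by 1 mL of KF reagent,
    established beforehand by standardization.
    """
    water_mg = titrant_ml * titer_mg_per_ml
    return 100.0 * water_mg / sample_mg


def kf_coulometric_water_ug(charge_c: float) -> float:
    """Water mass (micrograms) from the charge passed in a coulometric KF cell.

    One I2 (generated by 2 electrons) reacts with one H2O, so n(H2O) = Q / (2F).
    """
    moles_water = charge_c / (2.0 * FARADAY_C_PER_MOL)
    return moles_water * WATER_G_PER_MOL * 1e6


# Hypothetical example: 2.50 mL of reagent (titer 5.0 mg/mL) for a 500 mg sample
print(kf_volumetric_water_percent(2.50, 5.0, 500.0))  # 2.5
# About 10.71 C corresponds to roughly 1 mg (1000 ug) of water
print(round(kf_coulometric_water_ug(10.71)))  # 1000
```

In practice the titer is re-established regularly against a water standard, because KF reagent strength drifts over time.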

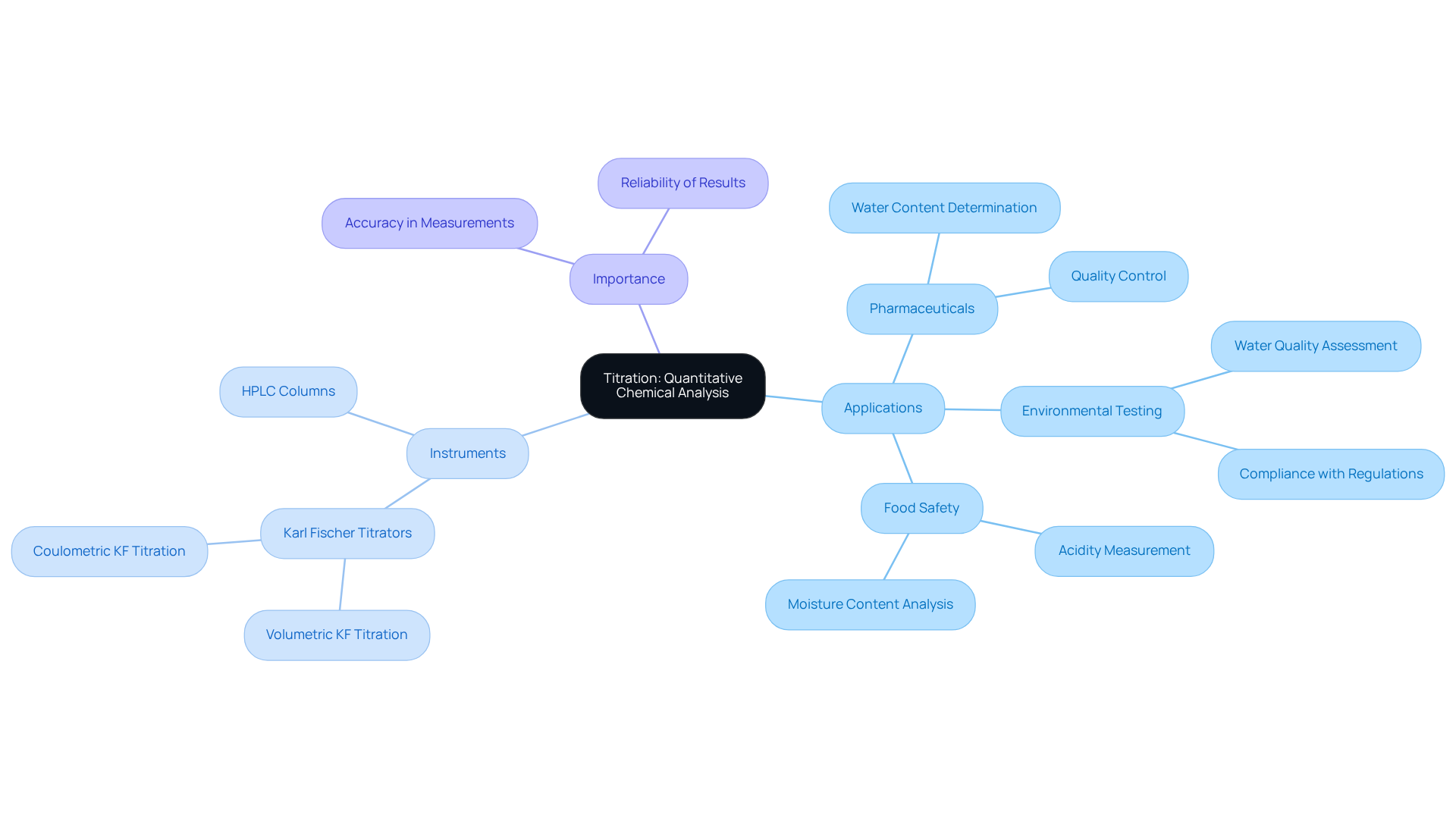

Titration: A Method for Quantitative Chemical Analysis

By definition, titration is a fundamental quantitative analytical technique that determines the concentration of an unknown solution by reacting it with a titrant of known concentration. It is used extensively in pharmaceuticals, environmental testing, and food safety. JM Science Inc. offers a comprehensive selection of premium titrators for tasks such as determining the concentration of active ingredients, including Karl Fischer titrators for measuring the water content that affects product stability. JM Science also provides high-performance liquid chromatography (HPLC) columns and accessories, which are critical for various analytical applications.

With over 75% of laboratories employing volumetric techniques for quantitative analysis, the significance of reliable instruments cannot be overstated. Karl Fischer analysis, for example, plays a vital role in measuring the water content of drugs, which is crucial for maintaining efficacy and shelf life. According to the 'Karl Fischer Titration Overview' case study, the method has become indispensable for water content determination across multiple sectors, including pharmaceuticals.

In environmental testing, titration is used to evaluate water quality, including pH and contaminant levels, helping laboratories comply with environmental regulations. Acid-base titration also plays a significant role in food safety: it is used to assess acidity in products such as dairy, helping to confirm that fermentation is proceeding appropriately.
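For the dairy case, titratable acidity is conventionally reported as percent lactic acid. A minimal sketch, assuming titration with standardized NaOH to a phenolphthalein endpoint and treating all titratable acid as lactic acid (monoprotic, 90.08 g/mol); the function name and example values are hypothetical:

```python
LACTIC_ACID_G_PER_MOL = 90.08  # lactic acid is monoprotic


def titratable_acidity_pct_lactic(naoh_ml: float, naoh_molarity: float,
                                  sample_g: float) -> float:
    """Titratable acidity of a dairy sample, expressed as % lactic acid (w/w).

    At the endpoint mol acid = mol NaOH (1:1 for a monoprotic acid).
    """
    mol_naoh = naoh_ml / 1000.0 * naoh_molarity
    lactic_g = mol_naoh * LACTIC_ACID_G_PER_MOL
    return 100.0 * lactic_g / sample_g


# Hypothetical: 2.00 mL of 0.100 M NaOH to neutralize a 10.0 g milk sample
print(round(titratable_acidity_pct_lactic(2.00, 0.100, 10.0), 3))  # 0.18
```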

Industry leaders emphasize that accurate titration methods, supported by high-quality instruments such as those from JM Science, are essential for upholding stringent standards in pharmaceuticals and environmental safety. This underscores the importance of titration in laboratory environments.

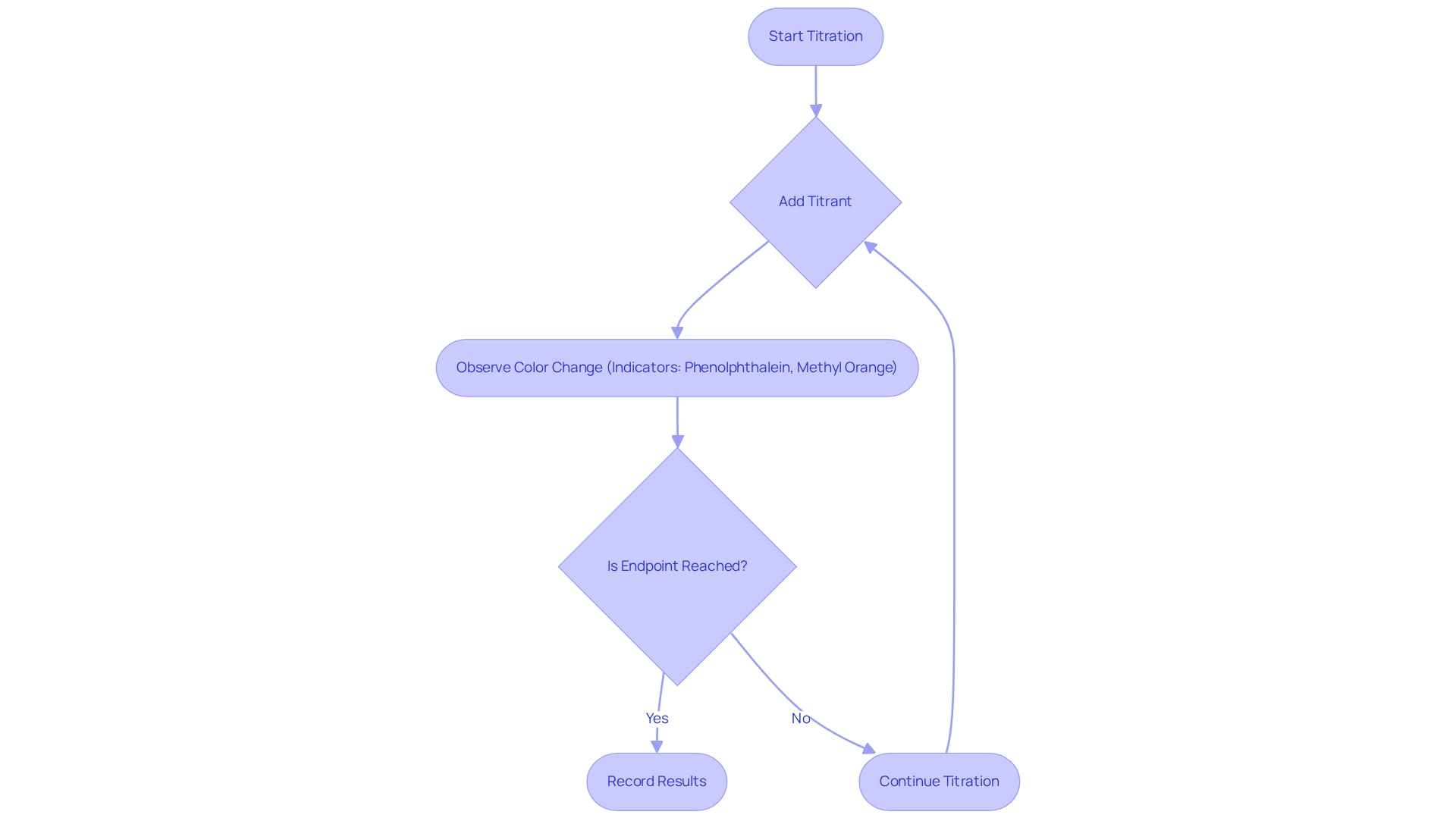

Endpoint: The Point of Reaction Completion in Titration

The endpoint of a titration marks the observed completion of the reaction between the titrant and the analyte, typically signaled by a distinct color change or a specific instrument reading. Identifying it accurately is paramount for precise results, because even minor deviations carry directly into the concentration calculation.
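To see why small deviations matter: with the titrant concentration and analyte volume fixed, the calculated concentration is proportional to the titrant volume read at the endpoint, so an overshoot produces the same relative error in the result. The sketch below assumes a single extra drop of about 0.05 mL, a typical burette drop size used here only for illustration; the function name is hypothetical.

```python
def endpoint_relative_error_pct(equivalence_ml: float, overshoot_ml: float) -> float:
    """Relative error (%) in the calculated concentration caused by reading the
    endpoint overshoot_ml past the true equivalence volume.

    Because C_analyte = C_titrant * V_titrant / V_analyte, the error in
    concentration scales directly with the error in titrant volume.
    """
    return 100.0 * overshoot_ml / equivalence_ml


# One hypothetical extra drop (~0.05 mL) at different titrant volumes
for v_eq in (5.0, 10.0, 25.0, 40.0):
    print(f"{v_eq:5.1f} mL -> {endpoint_relative_error_pct(v_eq, 0.05):.2f}% error")
```

The same drop costs 1% at a 5 mL titre but only about 0.12% at 40 mL, which is one reason titrant concentrations are often chosen so that the endpoint falls well into the burette's range.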

Common indicators such as phenolphthalein and methyl orange signal the endpoint through observable color changes. Lab managers must remain vigilant, however: overshooting the endpoint adds excess titrant, skewing the data and potentially compromising product quality.
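Choosing between such indicators comes down to where the equivalence point lies. The sketch below is a simplified check rather than a complete selection method: it estimates the equivalence-point pH for a weak acid titrated with a strong base and compares it with approximate visual transition ranges (methyl orange about pH 3.1 to 4.4, phenolphthalein about 8.2 to 10.0). It assumes 25 °C (Kw = 1.0 × 10⁻¹⁴) and the usual approximation [OH⁻] ≈ √(Kb·C); the function names and acetic acid values are illustrative.

```python
import math

KW = 1.0e-14  # ion product of water at 25 °C

# Approximate visual transition ranges (pH) of two common indicators
INDICATOR_RANGES = {
    "methyl orange": (3.1, 4.4),
    "phenolphthalein": (8.2, 10.0),
}


def ph_at_equivalence_weak_acid(ka: float, salt_molarity: float) -> float:
    """pH at the equivalence point of a weak acid / strong base titration.

    At equivalence the solution holds the conjugate base A- at salt_molarity;
    A- hydrolyzes with Kb = Kw / Ka, and [OH-] ~ sqrt(Kb * C).
    """
    kb = KW / ka
    hydroxide = math.sqrt(kb * salt_molarity)
    return 14.0 + math.log10(hydroxide)  # pH = 14 - pOH


def indicators_covering(ph: float) -> list[str]:
    """Names of indicators whose transition range contains the given pH."""
    return [name for name, (low, high) in INDICATOR_RANGES.items() if low <= ph <= high]


# Acetic acid (Ka ~ 1.8e-5), diluted to 0.050 M acetate at equivalence
ph_eq = ph_at_equivalence_weak_acid(1.8e-5, 0.050)
print(round(ph_eq, 2), indicators_covering(ph_eq))  # 8.72 ['phenolphthalein']
```

For a strong acid titrated with a strong base the equivalence pH is 7.0, inside neither range, yet both indicators usually work because the near-vertical part of the curve spans them; a fuller check would compare each indicator's range with the steep region of the curve rather than with a single pH.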

Endpoint errors often arise from misjudged color changes, because observers differ in their sensitivity to color, and from neglecting the effect of temperature on reaction rates. Such inaccuracies can lead to substantial discrepancies in results, underscoring the need for meticulous observation throughout the process.

As industry experts emphasize, ensuring endpoint accuracy is not merely a procedural formality; it is essential for maintaining compliance and obtaining reliable results in chemical analysis.

Equivalence Point: Theoretical Completion of Reaction in Titration

The equivalence point in the titration process represents the theoretical moment when the quantity of titrant added is stoichiometrically equivalent to the amount of analyte in the solution. This critical juncture is distinct from the endpoint, which is determined through experimental observation, often marked by a color change in an indicator. Grasping this distinction is crucial for lab managers, as it directly influences the interpretation of titration outcomes and the accuracy of measurement calculations.

For instance, consider a laboratory scenario in which a 25.00 mL sample of a monoprotic acid (HX) is titrated with sodium hydroxide (NaOH). If 42.23 mL of 0.1255 M NaOH is required to reach the equivalence point, the moles of NaOH added are 0.04223 L × 0.1255 mol/L = 5.300 × 10⁻³ mol. Because the balanced equation (HX + NaOH → NaX + H₂O) gives a 1:1 mole ratio, the sample also contains 5.300 × 10⁻³ mol HX, so its concentration is 5.300 × 10⁻³ mol ÷ 0.02500 L = 0.2120 M. The calculation hinges on the stoichiometry of the balanced equation, which is what defines the equivalence point.

Another example is a 50.0 mL blood sample titrated with a 0.010 M solution of a reagent, "chemical C," which requires 23.2 mL to reach the equivalence point. Converting that volume into the analyte's concentration again depends on the stoichiometry of the reaction, illustrating the practical application of equivalence point determination in laboratory settings.
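Both calculations follow the same pattern: convert the titrant volume to moles, apply the mole ratio from the balanced equation, and divide by the analyte volume. A minimal sketch; the function name is hypothetical, and because the text does not give the reaction for "chemical C," the 1:1 ratio used for the blood sample is an assumption for illustration only.

```python
def analyte_molarity(titrant_molarity: float, titrant_ml: float,
                     analyte_ml: float, analyte_per_titrant: float = 1.0) -> float:
    """Analyte concentration (mol/L) from the titrant volume at the equivalence point.

    analyte_per_titrant is the moles of analyte reacting with one mole of
    titrant, read from the balanced equation (1.0 for HX + NaOH -> NaX + H2O).
    """
    mol_titrant = titrant_molarity * titrant_ml / 1000.0
    mol_analyte = mol_titrant * analyte_per_titrant
    return mol_analyte / (analyte_ml / 1000.0)


# Monoprotic acid HX: 42.23 mL of 0.1255 M NaOH for a 25.00 mL sample
print(f"{analyte_molarity(0.1255, 42.23, 25.00):.4f} M")  # 0.2120 M

# Blood sample: 23.2 mL of 0.010 M chemical C for 50.0 mL, assuming 1:1 (hypothetical)
print(f"{analyte_molarity(0.010, 23.2, 50.0):.2e} M")  # 4.64e-03 M
```

For a diprotic acid titrated with NaOH, for example, analyte_per_titrant would be 0.5, since two moles of hydroxide are consumed per mole of acid.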

Recent studies have emphasized the importance of accuracy in identifying the equivalence point, as even minor deviations can lead to substantial errors in concentration determinations. In forensic analysis, for example, correctly identifying the equivalence point can determine whether a patient has consumed a normal amount of medication or an overdose, highlighting the real-world stakes of accurate titration.

Chemists stress that a thorough understanding of equivalence points is essential for successful titrations. As one expert noted, 'When conducting equivalence point analysis, stoichiometry represents an essential component.' Lab managers should therefore ensure that their teams are well versed in the principles of quantitative analysis and in the factors that influence equivalence point determination. Several techniques can locate the equivalence point, including color indicators, which are among the most common, as well as pH measurement and conductance measurement; the choice of technique plays a critical role in the precision of results.

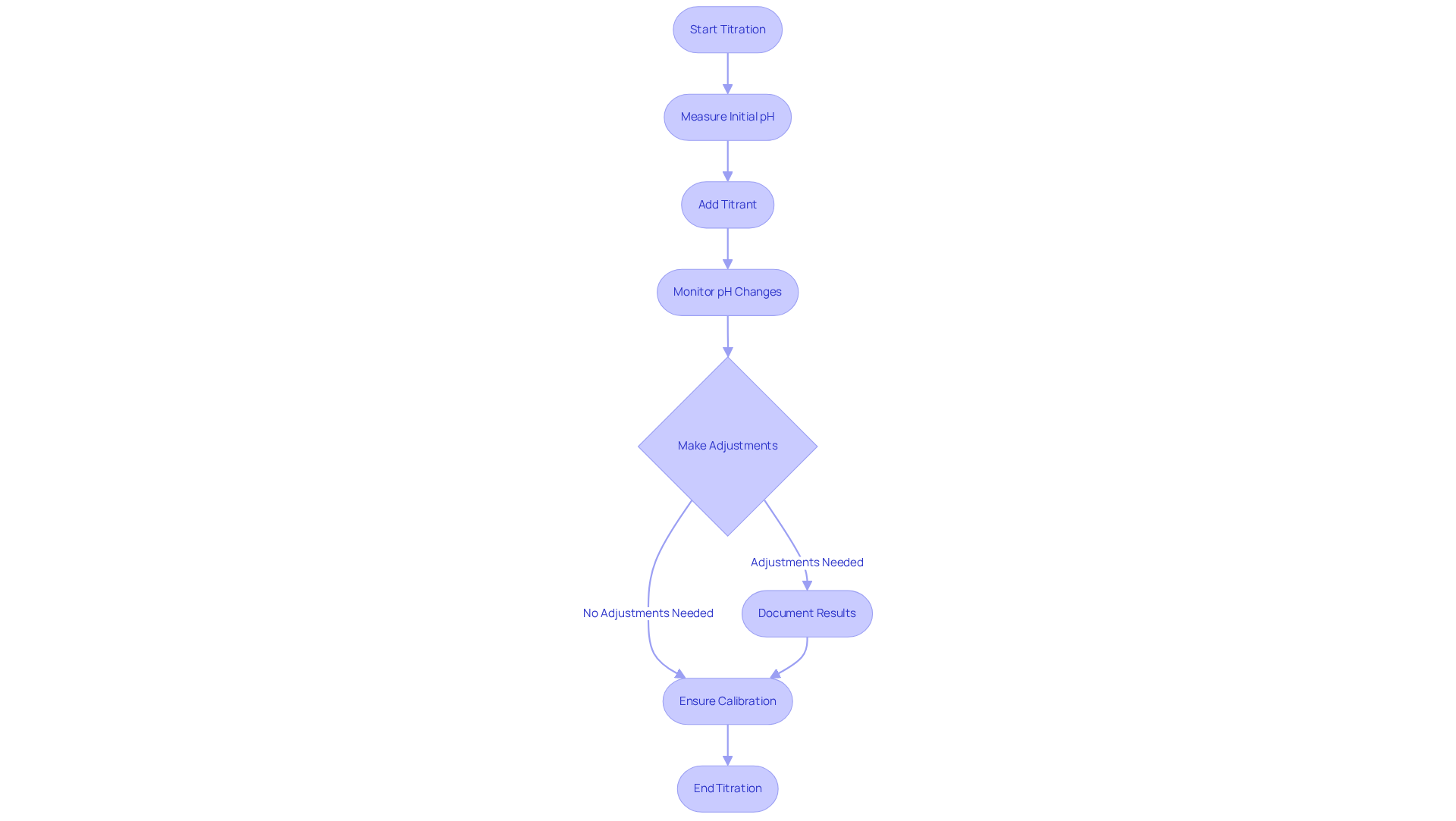

Acid-Base Titration: Determining pH Changes in Solutions

Acid-base titration is a fundamental technique for determining the concentration of an acid or base in solution by neutralizing it with a titrant of known concentration. Throughout the procedure the pH change is monitored, typically with a pH meter or an indicator, which is essential for ensuring the accuracy of medication formulations. In drug manufacturing this precision is vital, because it directly affects the dosage and formulation of medications and therefore their effectiveness and safety.

Recent data indicate that over 55% of laboratories use acid-base titration as a quality control method, underscoring its significance in drug manufacturing. pH monitoring is critical for product stability, solubility, and bioavailability. Regulatory agencies, including the FDA and EMA, have set stringent guidelines for pH measurement, prompting drug manufacturers to adopt advanced pH measurement technologies, such as those provided by JM Science Inc., to ensure compliance and uphold product quality.

Given that pH levels can significantly affect the stability and effectiveness of active medicinal compounds, real-time observation during the process facilitates immediate adjustments, enhancing the reliability of results. Digital pH monitoring systems, featuring automatic calibration and data logging, streamline this process further, improving accuracy and traceability. JM Science Inc.'s premium titrators, HPLC columns, and advanced digital monitoring solutions exemplify the high-quality equipment essential for these processes.

Michael Luo emphasizes, "The importance of precise and dependable pH measurement in the drug industry cannot be overstated, as it directly influences the quality, safety, and effectiveness of medicinal products."

In short, lab managers must be proficient in acid-base titration, which plays a crucial role in quality control and regulatory compliance within the drug industry. To strengthen their titration processes, lab managers should adopt best practices such as:

- Regular calibration of pH meters

- Thorough documentation of measurement methods

- Integration of advanced digital monitoring systems

These practices are reflected in the titration guidance provided by JM Science Inc.
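As an illustration of the first practice, a two-point pH calibration amounts to fitting a straight line through two buffer readings and comparing its slope with the theoretical Nernst slope (about −59.16 mV per pH unit at 25 °C). This is a generic sketch, not any vendor's calibration routine; the function names and buffer readings are hypothetical, and slope acceptance limits should come from your own SOP or the meter manufacturer.

```python
NERNST_SLOPE_MV_25C = -59.16  # theoretical glass-electrode slope at 25 °C, mV per pH


def two_point_calibration(ph_a: float, mv_a: float, ph_b: float, mv_b: float):
    """Fit mV = offset + slope * (pH - 7) through two buffer readings.

    Returns (slope in mV/pH, offset in mV at pH 7, slope as % of theoretical).
    """
    slope = (mv_b - mv_a) / (ph_b - ph_a)
    offset_at_ph7 = mv_a + slope * (7.0 - ph_a)
    slope_pct = 100.0 * slope / NERNST_SLOPE_MV_25C
    return slope, offset_at_ph7, slope_pct


def mv_to_ph(mv: float, slope: float, offset_at_ph7: float) -> float:
    """Convert an electrode reading (mV) to pH using the calibration line."""
    return 7.0 + (mv - offset_at_ph7) / slope


# Hypothetical readings: pH 7.00 buffer -> +2.0 mV, pH 4.01 buffer -> +173.5 mV
slope, offset, pct = two_point_calibration(7.00, 2.0, 4.01, 173.5)
print(f"slope {slope:.2f} mV/pH ({pct:.1f}% of theoretical), offset {offset:.1f} mV")
print(f"sample at -50.0 mV -> pH {mv_to_ph(-50.0, slope, offset):.2f}")
```

Because the theoretical slope scales with absolute temperature, meters with automatic temperature compensation adjust it for the measurement temperature.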

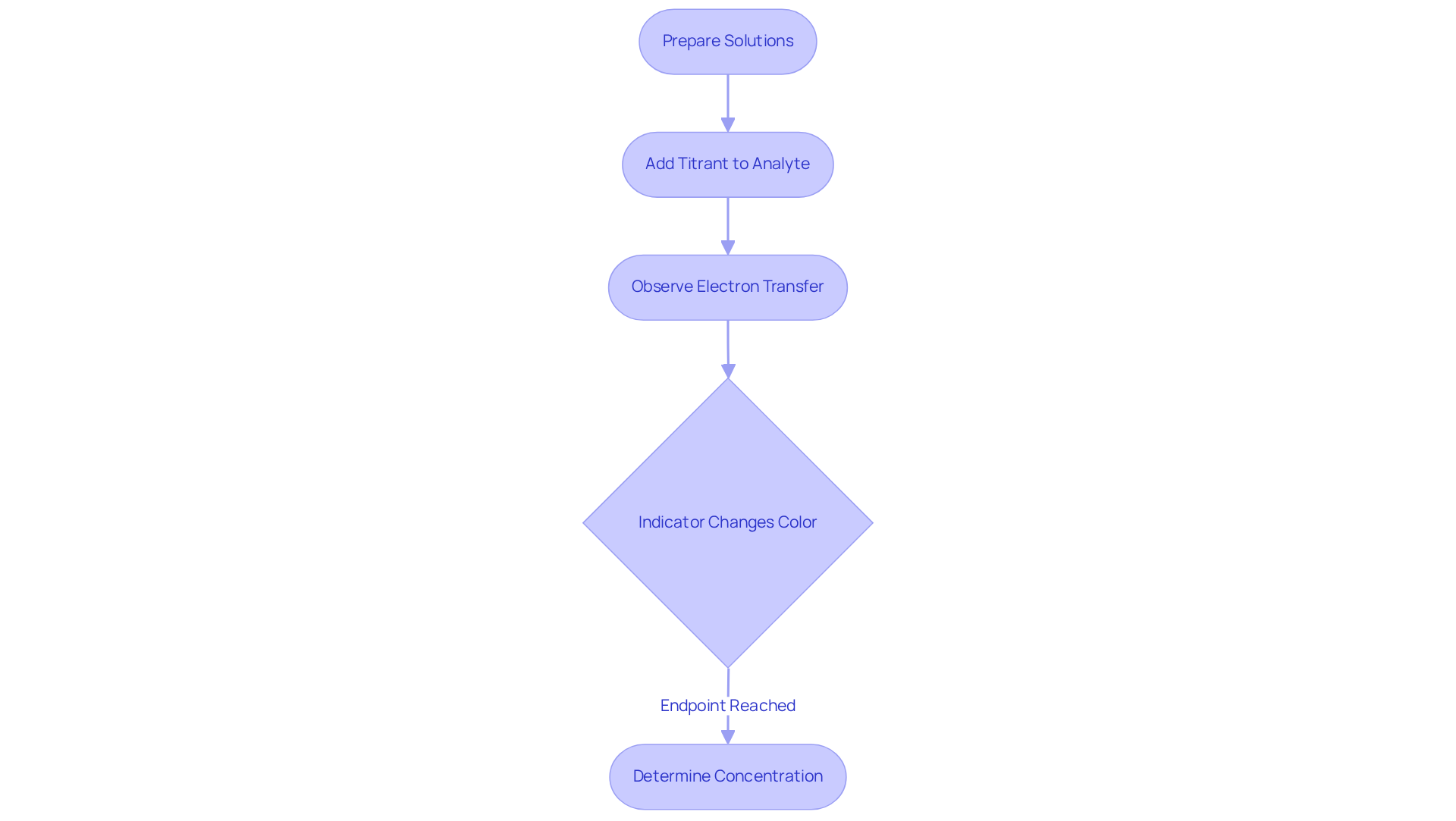

Redox Titration: Measuring Electron Transfer in Reactions

Redox titration is a pivotal analytical technique that quantifies the transfer of electrons between the titrant and the analyte. It is used to determine the concentration of oxidizing and reducing agents in solution, particularly in pharmaceutical analysis, where precise measurements are crucial for product safety and efficacy. For instance, redox titration is used to measure hydrogen peroxide levels in sanitizers, which must be correct for disinfection procedures to be effective.
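For the hydrogen peroxide example, the calculation depends on the titrant chosen. The sketch below assumes a cerium(IV) titrant, like the cerium sulfate discussed in this section, and the reaction H₂O₂ + 2 Ce⁴⁺ → O₂ + 2 Ce³⁺ + 2 H⁺, so one mole of H₂O₂ consumes two moles of Ce(IV). The titer is treated as a correction factor applied to the nominal titrant concentration. The function name, titrant volume, and sample mass are hypothetical; the titer value is borrowed from the study cited in this section purely for illustration.

```python
H2O2_G_PER_MOL = 34.01


def h2o2_percent_w_w(ce_ml: float, ce_nominal_molarity: float, titer: float,
                     sample_g: float) -> float:
    """Hydrogen peroxide content (% w/w) from a cerium(IV) titration.

    Assumes H2O2 + 2 Ce(4+) -> O2 + 2 Ce(3+) + 2 H+, i.e. 2 mol Ce(IV) per mol H2O2.
    titer corrects the nominal titrant molarity to its actual value.
    """
    mol_ce = ce_ml / 1000.0 * ce_nominal_molarity * titer
    mol_h2o2 = mol_ce / 2.0
    return 100.0 * mol_h2o2 * H2O2_G_PER_MOL / sample_g


# Hypothetical: 8.82 mL of nominal 0.1 M Ce(IV), titer 1.00505, 0.5000 g sample
print(f"{h2o2_percent_w_w(8.82, 0.1, 1.00505, 0.5000):.2f} % H2O2")  # 3.01 % H2O2
```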

In one study, the titer of cerium sulfate, a commonly used titrant, was determined to be 1.00505 with a relative standard deviation of 0.171% over six measurements, underscoring the reliability of redox titration in quality control. Redox indicators, which change color as they switch between their oxidized and reduced forms, signal the endpoint and are essential for accurate results.
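The relative standard deviation quoted here is the sample standard deviation of the replicate titers divided by their mean, expressed as a percentage. A minimal sketch using Python's standard `statistics` module; the function name is hypothetical and the six values below are invented for illustration, not the study's data:

```python
import statistics


def mean_and_rsd(values: list[float]) -> tuple[float, float]:
    """Mean and relative standard deviation (%) of replicate results.

    Uses the sample standard deviation (n - 1 denominator), as is usual
    for a small set of replicate determinations.
    """
    mean = statistics.mean(values)
    rsd_pct = 100.0 * statistics.stdev(values) / mean
    return mean, rsd_pct


# Six hypothetical titer determinations for a cerium(IV) sulfate solution
titers = [1.0012, 1.0025, 1.0019, 1.0031, 1.0008, 1.0020]
mean, rsd = mean_and_rsd(titers)
print(f"titer = {mean:.5f}, RSD = {rsd:.3f}% (n = {len(titers)})")
```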

Lab managers must also recognize the significance of redox analysis in environmental testing. This technique assists in evaluating the concentration of pollutants, ensuring adherence to safety standards. The ability to measure electron transfer effectively not only enhances analytical precision but also supports informed decision-making across various sectors, including pharmaceuticals and environmental science. It is crucial to acknowledge, however, that human errors can significantly impact measurement outcomes. This highlights the necessity for meticulous technique and diligent record-keeping in laboratory practices.
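One widely used redox titration for pollutant load is chemical oxygen demand (COD), chosen here as an example: the sample is digested with a known excess of potassium dichromate, and the dichromate left over is titrated with ferrous ammonium sulfate (FAS) alongside a reagent blank. A minimal sketch of the standard calculation, COD (mg O₂/L) = (A − B) × M × 8000 / V, where A and B are the FAS volumes for the blank and the sample, M is the FAS molarity, and V is the sample volume in mL; the function name and readings are hypothetical.

```python
def cod_mg_o2_per_l(blank_fas_ml: float, sample_fas_ml: float,
                    fas_molarity: float, sample_ml: float) -> float:
    """Chemical oxygen demand (mg O2/L) from a dichromate digestion with FAS titration.

    blank_fas_ml - sample_fas_ml is the FAS equivalent of dichromate consumed by the
    sample. Fe(2+) -> Fe(3+) is a one-electron change, so FAS molarity equals its
    normality; 8000 = 8 mg O2 per milliequivalent x 1000 mL per L.
    """
    return (blank_fas_ml - sample_fas_ml) * fas_molarity * 8000.0 / sample_ml


# Hypothetical readings: blank 10.20 mL, sample 7.45 mL of 0.100 M FAS, 20.0 mL sample
print(f"COD = {cod_mg_o2_per_l(10.20, 7.45, 0.100, 20.0):.0f} mg O2/L")  # COD = 110 mg O2/L
```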

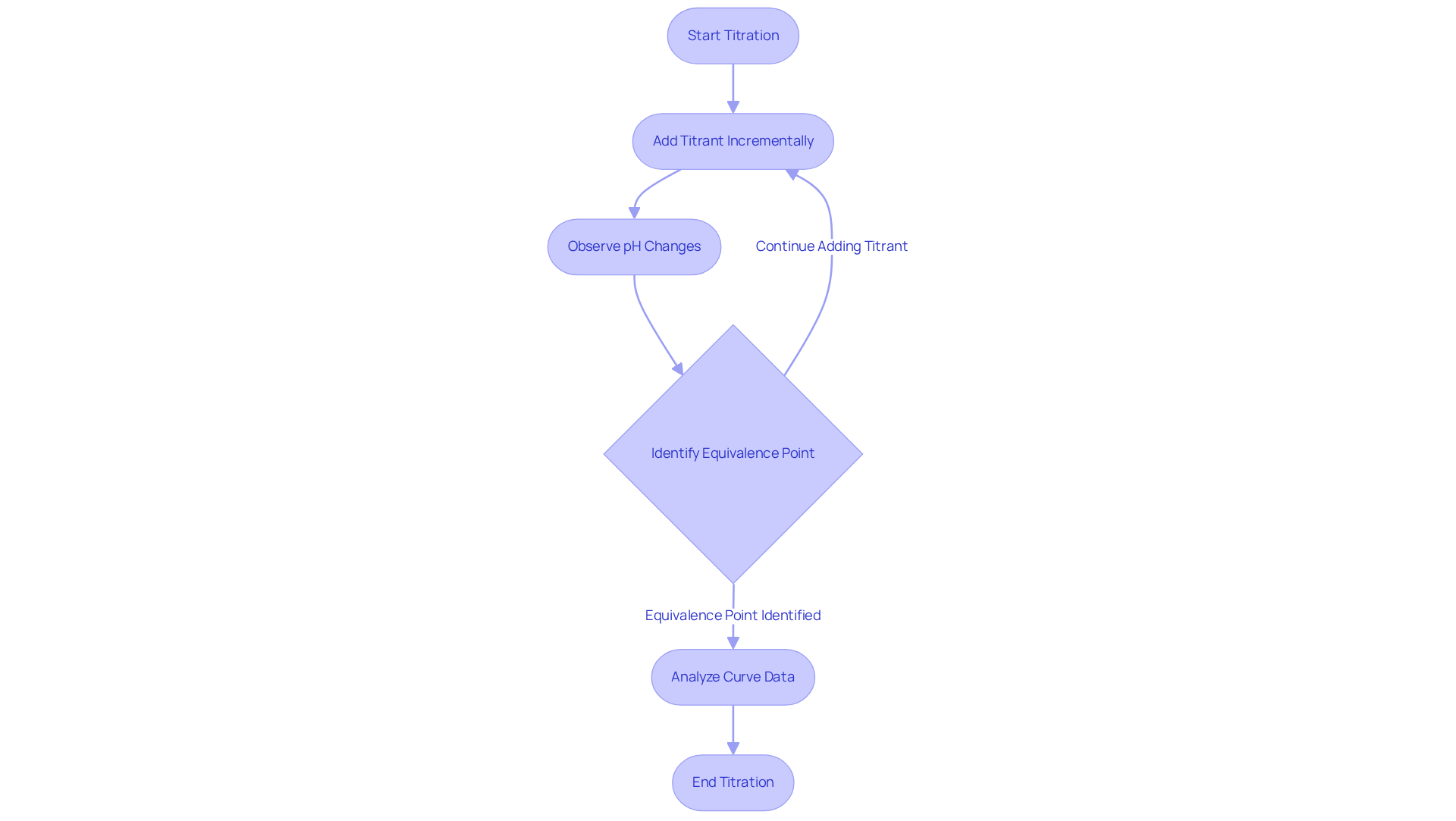

Titration Curves: Visualizing Reaction Progress and pH Changes

Titration curves are graphs of pH (or electrode potential) against the volume of titrant added to an analyte. Besides depicting the progress of the reaction, they are critical tools for locating the equivalence point, where the amount of titrant added is stoichiometrically equivalent to the amount of analyte. By examining the shape and features of a curve, lab managers can also assess the solution's buffering capacity and the overall efficiency of the process, insights that help refine procedures and ensure precise, accurate results.

In a typical acid-base titration, for instance, the curve changes steeply around the equivalence point, reflecting a rapid change in pH. This sharp transition gives lab managers a clear signal for locating the endpoint. Curve data can also reveal multiple equilibria in complex solutions (a polyprotic acid, for example, can produce more than one inflection), guiding changes to the titration strategy.
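Locating that steep region from data is straightforward to automate. The sketch below is a generic first-derivative method, not the algorithm of any particular titrator or software package: it simulates an idealized titration of 25.00 mL of 0.100 M HCl with 0.100 M NaOH (a hypothetical example computed from the charge balance at 25 °C) and reports the midpoint of the volume interval where |ΔpH/ΔV| is largest. Function names are hypothetical.

```python
import math

KW = 1.0e-14  # ion product of water at 25 °C


def ph_strong_acid_strong_base(acid_m: float, acid_ml: float,
                               base_m: float, base_ml: float) -> float:
    """pH after adding base_ml of strong base to acid_ml of strong acid.

    Charge balance: [H+] - [OH-] = d, where d is the net excess acid (mol/L,
    negative once base is in excess). With [OH-] = Kw/[H+] this gives
    [H+]^2 - d[H+] - Kw = 0; each branch below is the positive root written
    in a numerically stable form.
    """
    d = (acid_m * acid_ml - base_m * base_ml) / (acid_ml + base_ml)
    root = math.sqrt(d * d + 4.0 * KW)
    h = (d + root) / 2.0 if d >= 0 else 2.0 * KW / (root - d)
    return -math.log10(h)


def endpoint_first_derivative(volumes: list[float], phs: list[float]) -> float:
    """Midpoint volume of the interval with the largest |dpH/dV|."""
    best_slope, best_volume = -1.0, volumes[0]
    for i in range(len(volumes) - 1):
        slope = abs((phs[i + 1] - phs[i]) / (volumes[i + 1] - volumes[i]))
        if slope > best_slope:
            best_slope, best_volume = slope, (volumes[i] + volumes[i + 1]) / 2.0
    return best_volume


# Simulated curve: 0 to 40 mL of NaOH in 0.10 mL steps
volumes = [round(0.1 * i, 2) for i in range(401)]
phs = [ph_strong_acid_strong_base(0.100, 25.00, 0.100, v) for v in volumes]
print(f"estimated endpoint: {endpoint_first_derivative(volumes, phs):.2f} mL")
```

With 0.10 mL steps the estimate lands within half a step of the true 25.00 mL equivalence volume. Real data are noisier, so practical implementations may add smoothing or interpolation, which this sketch omits.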

The significance of titration curves extends beyond theory to practical applications across many sectors. In pharmaceutical laboratories, for example, they are used to determine the concentration of active components in formulations, supporting product quality, safety, and compliance with regulatory standards; according to one study, titration is used extensively in the pharmaceutical sector for exactly this purpose.

Furthermore, automated dosing systems, such as those integrated with LabX™ Titration software, enhance efficiency and precision in dosing processes. By mastering the interpretation of these curves and employing contemporary measurement techniques, lab managers can significantly improve the reliability of their analytical results, ultimately contributing to enhanced product quality and safety.

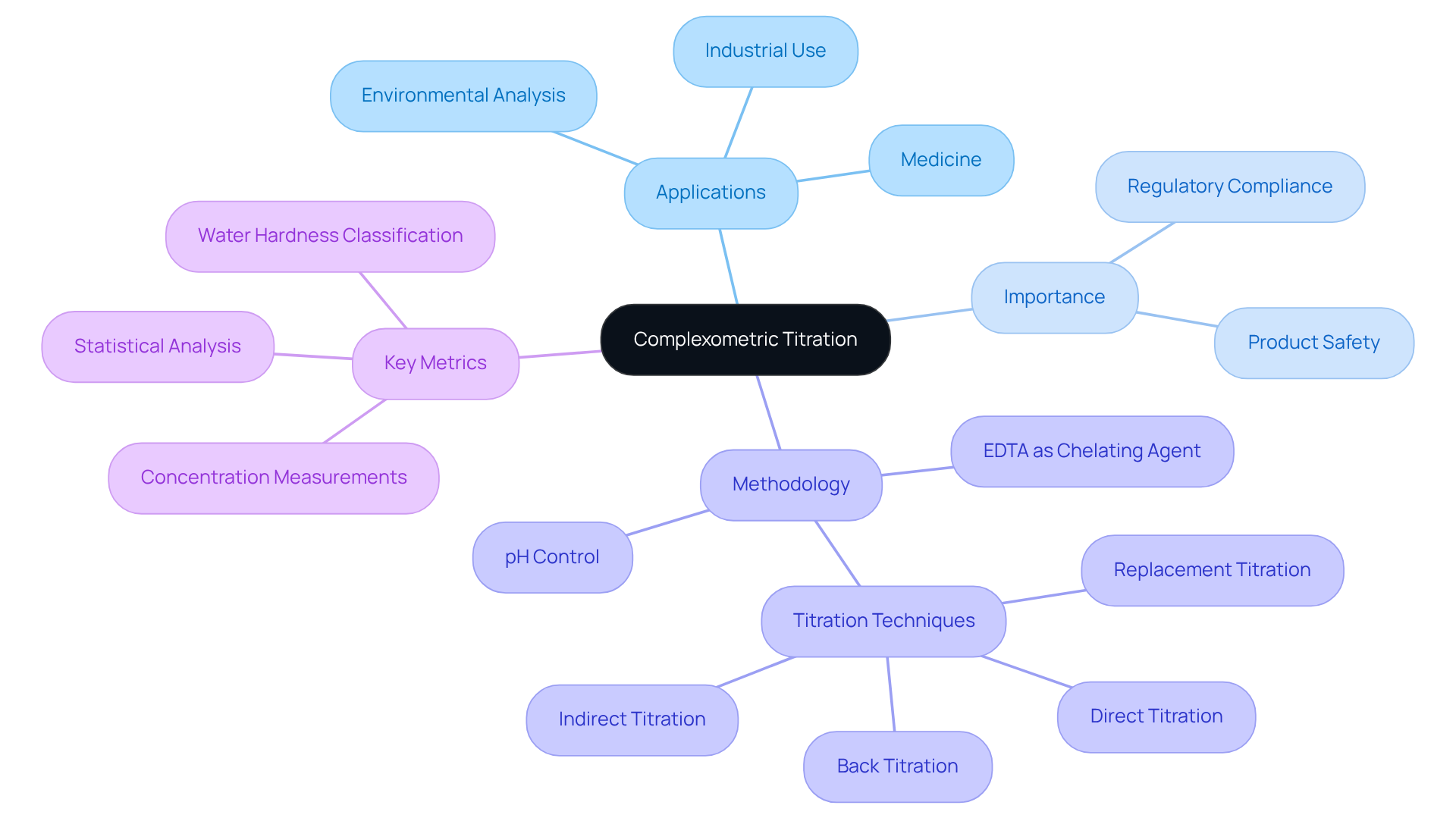

Complexometric Titration: Analyzing Metal Ion Concentrations

Complexometric titration is a cornerstone analytical method for measuring metal ion concentrations in solution, most often using ethylenediaminetetraacetic acid (EDTA) as the chelating agent. It is particularly important in environmental analysis and medicine, where precise monitoring of metal ions is vital for product safety and regulatory compliance. Water hardness testing illustrates how widely the method is applied in quality control: approximately 85% of U.S. homes have hard water.

EDTA titrations extend beyond research laboratories to routine practical work such as water hardness evaluation and the assessment of metal content in pharmaceuticals. For example, calcium and magnesium ions in water samples are routinely determined by EDTA titration, providing critical information for water treatment facilities and industrial operations. Water hardness is reported in mg/L as calcium carbonate (CaCO₃), a key metric of water quality.
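The hardness calculation follows directly from EDTA's 1:1 binding of Ca²⁺ and Mg²⁺: the moles of EDTA at the endpoint equal the combined moles of the two ions, which are then expressed as if they were all CaCO₃ (100.09 g/mol). A minimal sketch, assuming a total-hardness titration (commonly performed at about pH 10 with Eriochrome Black T as indicator); the function name and readings are hypothetical.

```python
CACO3_G_PER_MOL = 100.09


def total_hardness_mg_per_l(edta_ml: float, edta_molarity: float, sample_ml: float) -> float:
    """Total hardness (Ca2+ + Mg2+) in mg/L as CaCO3 from an EDTA titration.

    EDTA forms 1:1 complexes with Ca2+ and Mg2+, so mol (Ca + Mg) = mol EDTA.
    """
    mol_edta = edta_ml / 1000.0 * edta_molarity
    mg_as_caco3 = mol_edta * CACO3_G_PER_MOL * 1000.0
    return mg_as_caco3 / (sample_ml / 1000.0)


# Hypothetical: 12.40 mL of 0.0100 M EDTA for a 50.00 mL water sample
print(f"{total_hardness_mg_per_l(12.40, 0.0100, 50.00):.0f} mg/L as CaCO3")  # 248 mg/L as CaCO3
```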

Monitoring metal ion concentrations is also crucial in the medical field, as even trace amounts of certain metals can affect drug efficacy and safety. As industry experts emphasize, "the ability to accurately measure these concentrations is fundamental to maintaining high standards in pharmaceutical manufacturing." Laboratory managers therefore need expertise in complexometric techniques to obtain reliable results and meet rigorous regulatory standards. Controlling the solution's pH, typically with a buffer, is equally imperative: EDTA's ability to bind metal ions depends on its protonation state, so a fixed pH establishes well-defined equilibria and improves the precision of the measurement.

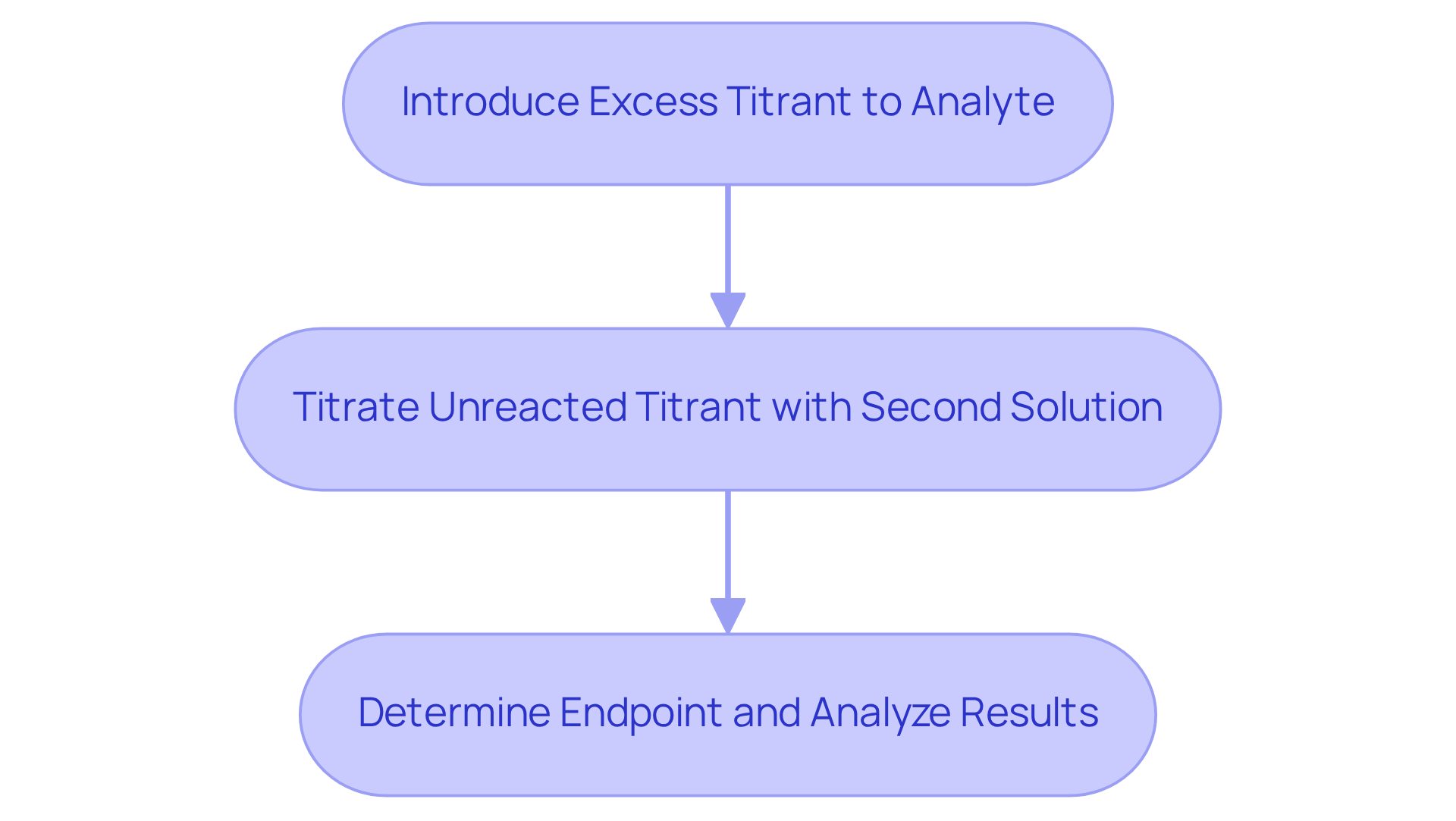

Back Titration: An Indirect Approach to Quantitative Analysis

Back titration is used when the endpoint of a direct titration is difficult to determine. A known excess of a standard reagent is added to the analyte, and the unreacted excess is then titrated with a second standard solution; the amount of analyte follows from the difference between the reagent added and the reagent left over. The approach is particularly useful for solid samples or for reactions that proceed slowly.
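For a solid sample, a common textbook case is calcium carbonate (for example, in limestone or an antacid tablet), used here as an illustrative assumption: a known excess of HCl dissolves the sample (CaCO₃ + 2 HCl → CaCl₂ + H₂O + CO₂), and the unreacted HCl is titrated with NaOH. The function name and readings are hypothetical.

```python
CACO3_G_PER_MOL = 100.09


def caco3_percent_back_titration(hcl_ml: float, hcl_molarity: float,
                                 naoh_ml: float, naoh_molarity: float,
                                 sample_g: float) -> float:
    """% CaCO3 (w/w) in a solid sample by back titration.

    Step 1: a known excess of HCl reacts with the sample (2 mol HCl per mol CaCO3).
    Step 2: the unreacted HCl is titrated with NaOH (1 mol NaOH per mol HCl).
    """
    mol_hcl_added = hcl_ml / 1000.0 * hcl_molarity
    mol_hcl_left = naoh_ml / 1000.0 * naoh_molarity
    mol_caco3 = (mol_hcl_added - mol_hcl_left) / 2.0
    return 100.0 * mol_caco3 * CACO3_G_PER_MOL / sample_g


# Hypothetical: 0.2500 g sample, 50.00 mL of 0.1000 M HCl, 5.04 mL of 0.1000 M NaOH
print(f"{caco3_percent_back_titration(50.00, 0.1000, 5.04, 0.1000, 0.2500):.1f} % CaCO3")  # 90.0 % CaCO3
```

In such procedures the solution is often boiled to expel CO₂ before the back titration, since dissolved CO₂ would otherwise consume some of the NaOH.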

It is crucial for lab managers to familiarize themselves with back titration methods, as this knowledge ensures accurate results in demanding analytical scenarios. Mastery of this technique not only enhances analytical precision but also reinforces the credibility of laboratory practices.

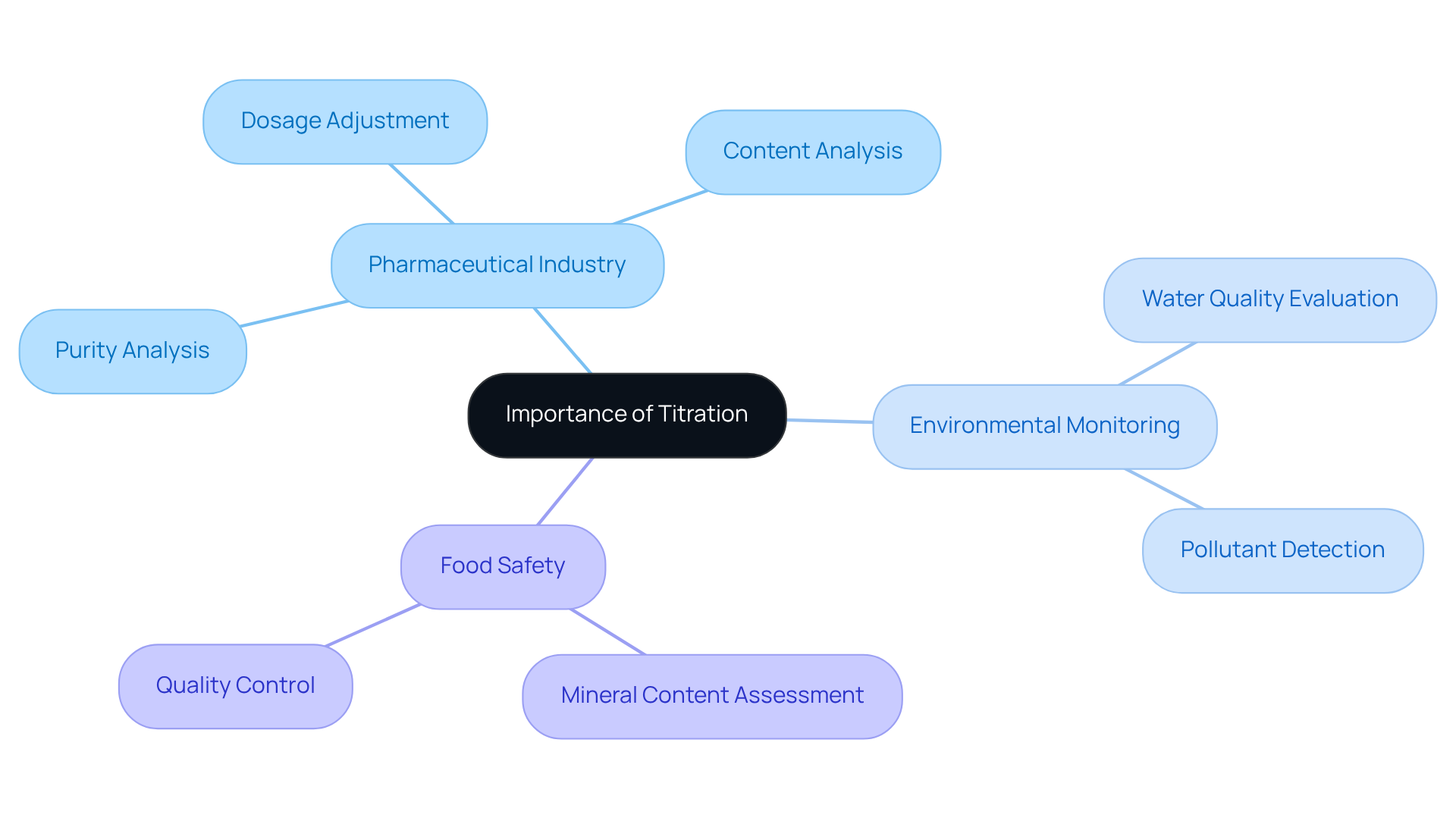

Importance of Titration: Applications in Chemistry and Industry

Titration is a fundamental method in analytical chemistry that plays a crucial role in sectors such as medicine, environmental testing, and food quality. Its applications are vital for quality control, ensuring that products meet safety and efficacy standards. In the pharmaceutical industry, for instance, precise titration is essential for confirming the concentration of active ingredients. Acid-base titrations also contribute to refining raw materials by reacting selectively with contaminants such as salts and metals. This meticulous work is imperative, since even minor deviations can affect patient safety and regulatory compliance.

Recent studies report that approximately 80% of pharmaceutical companies rely on titration techniques to meet stringent regulatory requirements, highlighting the method's significance in maintaining product integrity. Industry leaders emphasize that titration not only improves the precision of analytical results but also streamlines compliance procedures. Jessica Clifton, a director in the field, describes titration as a common technique in analytical chemistry for determining the concentration of an unknown solution by gradually adding a solution of known concentration.

Titration is also increasingly applied in environmental monitoring, where it helps evaluate water quality affected by acid rain and industrial discharges, an application crucial for safeguarding ecosystems and human health. In the food sector, titration is used to assess mineral content and ensure product consistency, which is vital for regulatory compliance and consumer safety.

The adaptability of titration extends to innovative applications, including automated systems that enhance efficiency and accuracy in analytical processes. JM Science Inc. provides a range of high-quality titrators, HPLC columns, and accessories that facilitate these advancements, enabling the concurrent testing of duplicate samples and significantly reducing analysis duration. Additionally, JM Science offers competitive pricing on its products, making them an appealing choice for pharmaceutical lab managers. As the landscape of analytical chemistry evolves, the importance of titration in ensuring product safety and efficacy remains paramount, solidifying its status as an indispensable tool for lab managers across various sectors.

Conclusion

Titration stands as a cornerstone in analytical chemistry, offering essential methodologies for precise quantitative analysis across diverse sectors, including pharmaceuticals, environmental testing, and food safety. This technique not only facilitates the accurate measurement of concentrations but also ensures compliance with stringent regulatory standards, underscoring its critical role in maintaining product integrity and safety.

Throughout this article, various titration methods have been explored, including:

- Karl Fischer titration for moisture analysis

- Acid-base titration for determining acid and base concentrations

- Redox titration for electron transfer measurements

- Complexometric titration for metal ion concentrations

Each method underscores the importance of precision and accuracy in laboratory practices, where even minor discrepancies can lead to significant consequences in product quality and safety. The distinction between endpoints and equivalence points has also been emphasized, illustrating how meticulous observation and understanding of these concepts are vital for successful titration outcomes.

As laboratory managers continue to navigate the complexities of chemical analysis, embracing best practices and advanced technologies becomes paramount. Implementing regular calibration, leveraging automated systems, and ensuring comprehensive training for staff can significantly enhance the reliability and efficiency of titration processes. By prioritizing these measures, laboratory professionals can not only uphold the highest standards of analytical accuracy but also contribute to the broader goals of safety and compliance within their respective industries.